Failure of Fully Autonomous Robotic Services

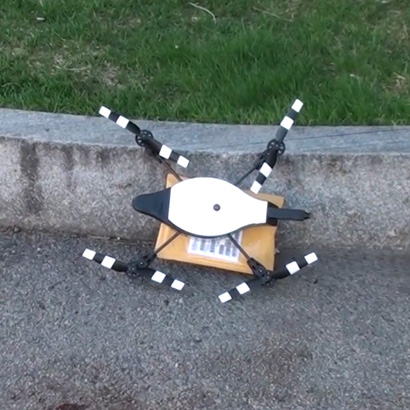

Fully autonomous robots are increasingly capable of operating in the unstructured environments of everyday life. As self-driving cars, delivery drones, robot vacuums, and many other such systems become integrated into society, interactions between robots and non-expert humans will become far more frequent. However, even the most reliable of these systems will not be immune to occasional failures, and the manner in which they fail can seriously affect users’ perception of those systems and the services they provide. Studies indicate that failure by a robotic service lowers users’ trust and can make them reluctant to use the service again. Other potential negative consequences of failure include negative feelings toward the service, inability to recover the task that was being performed, inability to diagnose what went wrong and fix the problem, and feelings of helplessness or uncertainty. Unexpected behaviors resulting from failing autonomous robots can also generate negative side effects. Aside from potentially causing additional problems or even creating danger, unexpected behaviors can have far-reaching consequences, such as instigating social conflicts between people.

Ultimately, both teleoperated and fully autonomous robots are used by humans to perform tasks on their behalf. In the case of fully autonomous robots, an operator or client entrusts the system with a task or responsibility that they expect it to carry out. The relationship between the operator and the system can be thought of as a form of delegation, since many tasks require the robot to exercise some level of discretion while carrying them out. One consequence of a failure in a fully autonomous robotic system is a deterioration in task performance, possibly to the point that the task can no longer be performed. However, even when a failure has rendered the system incapable of completing the task on its own, an autonomous robot may still be able to take actions that help move the task toward completion.

As autonomy increases, the amount of time the system can run while being ignored by the operator (known as neglect-time) also increases, which in turn can decrease the amount of attention the operator pays to the system at any given moment. If a system is sufficiently autonomous that the operator rarely pays any attention to it at all, it is considered unsupervised. When a problem occurs in such a system, the people responsible for the robot’s operation find themselves lacking sufficient knowledge to either understand the problem or identify the actions needed to address it, a phenomenon known as the out-of-the-loop problem. As fully autonomous robotic services are integrated into society, they will need to be capable of interacting with people who have a variety of relationships with the robot, not just its operator. These people will require different kinds of information and expect different kinds of recovery behaviors depending on their relationship with the robot.

We are currently working on establishing a theory that describes how a fully autonomous robotic service should behave, at an abstract level, when experiencing a range of failure conditions, so as to mitigate the negative impacts of the situation (e.g., preserving trust, reducing the negative feelings and confusion of operators and bystanders, and propagating or enabling recovery of the task being carried out). We hypothesize that recovery behaviors and communications designed to 1) continue supporting the service the robot was providing, and 2) support people's situation awareness based on their relationship with the robot will help mitigate the negative effects caused by a failure.