Multi-touch Technologies for Human-Robot Interaction

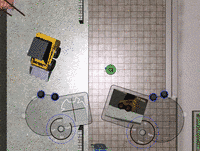

- Robot selection by lasso, one of many selection methods

- Manual control of robot via DREAM Controller

- Screenshot of the iPad manual driving interface
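Lasso selection can be understood as a point-in-polygon test. The finger's stroke is treated as a closed polygon, and every robot whose map position falls inside it is selected. The Python sketch below uses the standard even-odd (ray casting) rule. The function names, the dictionary of robot positions, and the assumption that robots and stroke share one map coordinate frame are hypothetical and do not come from our interface code.

```python
from typing import Dict, List, Tuple

Point = Tuple[float, float]


def point_in_polygon(p: Point, polygon: List[Point]) -> bool:
    """Even-odd rule: cast a ray toward +x and count how many edges it crosses."""
    x, y = p
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]  # last edge closes the lasso back to its start
        # Only edges that straddle the ray's y value can be crossed (this also rules out y1 == y2).
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def lasso_select(robots: Dict[str, Point], lasso: List[Point]) -> List[str]:
    """Return the sorted names of all robots whose positions lie inside the lasso stroke."""
    return sorted(name for name, pos in robots.items() if point_in_polygon(pos, lasso))


if __name__ == "__main__":
    robots = {"r1": (1.0, 1.0), "r2": (5.0, 5.0), "r3": (2.0, 3.0)}
    stroke = [(0.0, 0.0), (4.0, 0.0), (4.0, 4.0), (0.0, 4.0)]
    print(lasso_select(robots, stroke))  # ['r1', 'r3']
```

The even-odd rule also behaves sensibly for self-intersecting strokes, which are common when a user draws a lasso quickly.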

In emergency response, gathering intelligence is still largely a manual process despite advances in mobile computing and multi-touch interaction. Personnel in the field take notes on paper maps, which are then manually correlated to larger paper maps that are eventually digested by geographic information system (GIS) specialists who geo-reference the data by hand. Unfortunately, this analog process is personnel intensive and can often take twelve to twenty-four hours to get the data from the field to the daily operation briefings. This processing delay means that the information given to personnel going into the field is at least one operational period out of date. At a time when satellite photography and mobile connectivity are becoming ubiquitous in our digital lives, we believe that we can improve this state of the practice for most disciplines of emergency response.

Our goal is to integrate multiple digital sources into a common computing platform that provides two-way communication, tracking, and mission status to the personnel in the field and to the incident command hierarchy that supports them. Advanced technologies such as geo-referenced digital photography and ground robots are still limited to delivering their imagery only to the operators at the site, but this limitation is changing quickly. Recent advances in robotics, mobile communication, and multi-touch displays are bridging these technological gaps, enabling network-centric operations and increasing mission effectiveness.

Our research in human-computer interaction leverages these technologies through several different types of multi-touch displays. We have created a single-robot operator control unit and a multi-robot command and control interface; the latter is used to monitor and interact with all of the robots deployed in a disaster response. Users issue commands to individual robots or groups of robots by tapping and dragging, using a gesture set designed to be easy to learn. Users can place a trail of waypoints to mark specific areas of interest, or draw a specific path for the robots to follow. The system is built from discrete modules that allow integration of a variety of data sets, such as city maps, building blueprints, and other geo-referenced data sources. Users can pan and zoom to any area, and the interface can integrate video feeds from individual robots so that users can see things from the robots' perspective.
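The path-drawing behavior described above can be approximated in a few lines. The sketch below assumes a view transform of the form screen = map * zoom + pan, converts each touch sample of a drawn stroke back into map coordinates, and then thins the stroke into waypoints spaced at least `min_spacing` map units apart. The transform convention, the helper names `screen_to_map` and `stroke_to_waypoints`, and the spacing rule are all hypothetical; our interface may sample and smooth paths differently. `math.dist` requires Python 3.8 or later.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def screen_to_map(p: Point, pan: Point, zoom: float) -> Point:
    """Invert the assumed view transform screen = map * zoom + pan."""
    return ((p[0] - pan[0]) / zoom, (p[1] - pan[1]) / zoom)


def stroke_to_waypoints(stroke: List[Point], min_spacing: float) -> List[Point]:
    """Keep a touch sample only once it is at least min_spacing from the last kept waypoint."""
    if not stroke:
        return []
    waypoints = [stroke[0]]
    for p in stroke[1:]:
        if math.dist(p, waypoints[-1]) >= min_spacing:
            waypoints.append(p)
    if waypoints[-1] != stroke[-1]:
        waypoints.append(stroke[-1])  # always finish where the finger lifted
    return waypoints


if __name__ == "__main__":
    # Touch samples in screen pixels, with the map panned by (10, 20) and zoomed 2x.
    screen_stroke = [(10, 20), (11, 20), (12, 20), (13, 20), (14, 20), (14.5, 20)]
    map_stroke = [screen_to_map(p, pan=(10, 20), zoom=2.0) for p in screen_stroke]
    print(stroke_to_waypoints(map_stroke, min_spacing=1.0))
    # [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0), (2.25, 0.0)]
```

Converting to map coordinates before thinning keeps waypoint spacing independent of the current zoom level, so the same gesture yields the same path on the ground whether the user is zoomed in or out.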

Manual robot control is achieved using the DREAM (Dynamically Resizing Ergonomic and Multi-touch) Controller. The controller is virtually painted beneath the user's hands, adapting its size and orientation according to our newly designed algorithm for fast hand detection, finger registration, and handedness registration. Beyond robot control, the DREAM Controller and hand detection algorithms have a wide range of applications in general human-computer interaction, such as keyboard emulation and multi-touch user interface design.
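To make finger and handedness registration concrete, the sketch below shows one simple heuristic for a five-finger touch. It is an illustration only, not the algorithm published in our papers. It assumes coordinates with y increasing upward (on a y-down screen the handedness labels flip) and fingertips spread roughly around the palm. The heuristic sorts the touches by angle around their centroid; the widest angular gap is assumed to lie between the thumb and the little finger, and of the two touches bordering that gap, the thumb is taken to be the one farther from its other neighbor. If the fingers run clockwise from thumb to little finger, the hand is labeled right, otherwise left. `register_hand` and `FINGER_NAMES` are hypothetical names.

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float]
FINGER_NAMES = ["thumb", "index", "middle", "ring", "little"]


def register_hand(touches: List[Point]) -> Tuple[str, Dict[str, Point]]:
    """Label five fingertip touches and guess handedness (y-up coordinates assumed)."""
    if len(touches) != 5:
        raise ValueError("expected exactly five touch points")
    cx = sum(x for x, _ in touches) / 5
    cy = sum(y for _, y in touches) / 5
    angle = [math.atan2(y - cy, x - cx) for x, y in touches]

    # Touch indices sorted counterclockwise around the centroid.
    order = sorted(range(5), key=lambda i: angle[i])
    # gaps[k] is the counterclockwise angle from order[k] to order[k + 1], wrapping around.
    gaps = [(angle[order[(k + 1) % 5]] - angle[order[k]]) % (2 * math.pi) for k in range(5)]
    k = max(range(5), key=lambda j: gaps[j])

    # The widest gap separates thumb and little finger; the thumb is the border
    # touch that is farther from its other neighbor (thumb-index spacing is wider).
    lo, hi = k, (k + 1) % 5
    lo_spacing = math.dist(touches[order[lo]], touches[order[(lo - 1) % 5]])
    hi_spacing = math.dist(touches[order[hi]], touches[order[(hi + 1) % 5]])
    if lo_spacing > hi_spacing:
        start, step, hand = lo, -1, "right"  # thumb to little finger runs clockwise
    else:
        start, step, hand = hi, 1, "left"  # thumb to little finger runs counterclockwise

    fingers = {name: touches[order[(start + step * n) % 5]] for n, name in enumerate(FINGER_NAMES)}
    return hand, fingers


if __name__ == "__main__":
    right = [(-3.0, 0.0), (-1.5, 3.0), (0.0, 3.5), (1.5, 3.0), (3.0, 2.0)]
    hand, fingers = register_hand(right)
    print(hand, fingers["thumb"])  # right (-3.0, 0.0)
```

Once the thumb and index finger are known, a controller could be sized and rotated from their positions, which is the kind of adaptation the paragraph above describes; how the DREAM Controller actually derives its geometry is not shown here.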

Current work is moving from simulated robots to real robots. Having successfully controlled multiple robots with our three main multi-touch devices (Microsoft Surface, Apple iPad, and 3M Multi-Touch Displays), we are now integrating these technologies so that they work together simultaneously.

Videos

- Collaborative Control of Simulated Robots: Using the Microsoft Surface and Apple iPad to collaboratively control simulated robots.

- iPad Robot Control: Driving an ATRV robot ("Junior") using our iPad virtual joystick interface.

- Multi-Touch AR Drone: Using the DREAM Controller on Microsoft Surface to pilot an AR Drone.

- Multi-Robot Control: Examples of the gestures used to control multiple robots with the Microsoft Surface and Microsoft Robotics Developer Studio.

- Single Robot Control: Using the DREAM Controller on Microsoft Surface to control a 120-pound ATRV robot in a 200-square-foot maze.

- Mark Micire Dissertation: Mark Micire gives his dissertation defense on "Multi-Touch Interaction for Robot Command and Control."

Related Papers

Mark Micire, Eric McCann, Munjal Desai, Katherine M. Tsui, Adam Norton, and Holly A. Yanco. Hand and Finger Registration for Multi-Touch Joysticks on Software-Based Operator Control Units. Proceedings of the IEEE International Conference on Technologies for Practical Robot Applications, Woburn, MA, April 2011.

Mark Micire, Munjal Desai, Jill L. Drury, Eric McCann, Adam Norton, Katherine M. Tsui, and Holly A. Yanco. Design and Validation of Two-Handed Multi-Touch Tabletop Controllers for Robot Teleoperation. Proceedings of the International Conference on Intelligent User Interfaces, Palo Alto, CA, February 13-16, 2011.

Brenden Keyes, Mark Micire, Jill L. Drury and Holly A. Yanco. Improving Human-Robot Interaction through Interface Evolution. Human-Robot Interaction, Daisuke Chugo (Ed.), INTECH, 2010.

Mark Micire, Munjal Desai, Amanda Courtemanche, Katherine M. Tsui, and Holly A. Yanco. Analysis of Natural Gestures for Controlling Robot Teams on Multi-touch Tabletop Surfaces. ACM International Conference on Interactive Tabletops and Surfaces, Banff, Alberta, November 23-25, 2009.

Mark Micire, Jill L. Drury, Brenden Keyes, and Holly Yanco. Multi-Touch Interaction for Robot Control. International Conference on Intelligent User Interfaces (IUI), Sanibel Island, Florida, February 8-11, 2009.

Mark Micire. Evolution and Field Performance of a Rescue Robot. Journal of Field Robotics, Volume 25, Issue 1-2, pp. 17-30.

Mark Micire, Jill L. Drury, Brenden Keyes, Holly Yanco, and Amanda Courtemanche. Performance of Multi-Touch Table Interaction and Physically Situated Robot Agents. Poster presentation at the 3rd ACM/IEEE International Conference on Human-Robot Interaction, Amsterdam, March 2008.

Amanda Courtemanche, Mark Micire and Holly Yanco. Human-Robot Interaction using a Multi-Touch Display. 2nd Annual IEEE Tabletop Workshop, poster presentation, October 2007.

Holly A. Yanco, Brenden Keyes, Jill L. Drury, Curtis W. Nielsen, Douglas A. Few and David J. Bruemmer. Evolving Interface Design for Robot Search Tasks. Journal of Field Robotics, Volume 24, Issue 8-9, pp. 779-799. August/September 2007.

Mark Micire, Martin Schedlbauer and Holly Yanco. Horizontal Selection: An Evaluation of a Digital Tabletop Input Device. Proceedings of the 13th Americas Conference on Information Systems, Keystone, CO, August 2007.

Holly A. Yanco, Michael Baker, Robert Casey, Brenden Keyes, Philip Thoren, Jill L. Drury, Douglas Few, Curtis Nielsen and David Bruemmer. Analysis of Human-Robot Interaction for Urban Search and Rescue. Proceedings of the IEEE International Workshop on Safety, Security and Rescue Robotics. National Institute of Standards and Technology (NIST), Gaithersburg, MD, August 22-24, 2006.